
I spoke to an Nvidia AI NPC, and he mainly wanted to get me bladdered

In conversation with the cocktail-obsessed gamepeople of our supposed future

Image credit: Rock Paper Shotgun


If the purpose of a tech demo is to induce a flash of thinking “Hey that’s neat,” then I’d be lying if I said Nvidia’s Covert Protocol – a playable showcase for their AI NPC tool, Avatar Cloud Engine (ACE) – hadn’t worked on me. If, on the other hand, it’s to develop that thought into “Hey, I want this in games right now,” it’s going to take more than a slightly stilted natter with an aspiring bartender.

ACE, if you haven’t caught its previous showings on the tech/games trade show circuit, is an all-in-one “foundry” of AI-powered character creation tools – language models, speech recognition, text-to-speech, automated mouth flapping and so on – that Nvidia is pitching as the future of NPC interaction. Plugged into the third-party Inworld Engine, which seems to handle the bulk of the actual AI generation, ACE aims to replace pre-written and pre-recorded character dialogue with more dynamic lines that can respond accurately to any question or statement you can mutter into a microphone.


NVIDIA ACE | NVIDIA x Inworld AI - Pushing the Boundaries of Game Characters in Covert Protocol

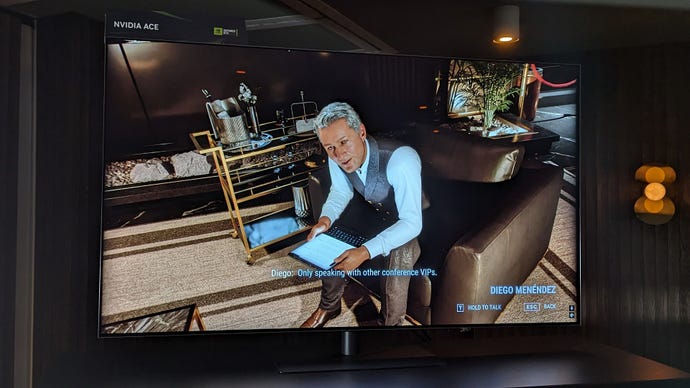

Covert Protocol wraps all that up into a short social investigation game, tasking you – a private detective – with schmoozing/lying your way into a fancy hotel’s private suites. First up for an AI-assisted interrogation was cheery bellboy Tae Hyun, and first among a gathering of journos to hit the mic, it turned out, was me.

It was weird. But it also… worked? As a game interaction, our question and answer session progressed perfectly logically. AI-generated answers were specific to the question, not hedged, and there were no “Could you repeat that?” or “I’m not sure what you mean” hiccups. Had someone walked in with no knowledge of the machine learning aspect, they might well have just thought ACE was feeding canned lines in response to preplanned queries. So yes, it was neat. A lot of responses even seemed to be taking previous responses into account, using them as context to avoid repetition.

Mostly, anyway. That man was consumed with Baltimore Zoo pride, slipping in endorsements to answers about his political views or his relationship with his mum like he was doing an SNL bit. In fairness, the segues were cheesy, but not entirely non-sequiturs, given I’d broached the subject of drinks to begin with. It’s also possible that this was more of an intentional character tic, of the AI’s making, than a glitch in the Matrix.

This guy doesn’t seem to love anything, except being a jerk. | Image credit: Rock Paper Shotgun

Still. I laughed at the time, but in hindsight there was something off about how readily this guy circled back to the exact same topic of his signature tipple. If meant as a quirky joke, it was tonally out of whack with the slick detective story presentation, and if it wasn’t, then surely it betrayed how quite literally artificial these ‘performances’ are. It was as if ACE was clinging to this character detail like a safety blanket, scared of deviating when it knows it’s got the cocktail thing down, even when doing so makes everything seem more robotic – not less.

Not that the masquerade was particularly well-maintained elsewhere. ACE’s AI voices were more naturalistic than those of last year’s ramen shop demo but were still stiff, monotonous, and peppered with strange pauses and pronunciations. All the classic text-to-speech tells, basically. Tae spoke of his family leaving Korea “for a reason,” implied to be North Korean aggression, with the same gravity as when he cracked the rubbish joke about aliens. Another of Covert Protocol’s chattable NPCs, a bigshot keynote speaker from whom we needed to extract a room number, reacted with the same non-surprise both to a friendly greeting and to being told his speech was cancelled.

Some responses also only came after an extended pause, which an Nvidia handler quickly attributed to the venue’s slow Wi-Fi – so even if this whole system made it into a finished retail game, it would presumably be an always-online affair, relying on your ownership of a high-quality connection to make all these remote queries to Inworld.


Then there’s the writing. Nothing I heard curled my toes like the stinking dialogue of Ubisoft’s NEO NPC demo (even if, worryingly, that’s also based on a combination of Inworld and Nvidia tech), but it was also kind of just there. No sparkle, no playfulness, no real weight to the words. I did laugh at Tae’s incessant booze hype, but only at the stark ridiculousness of it, not at any of the machine’s more intentional humour. And its attempt at a grizzled private-dick voiceover amounted to a tragically bland “A bar. Could go for an Old Fashioned right about now. But focus, Marcus, focus.” Nobody Wants to Die, it ain’t.

Half-Life 2 RTX gives an old classic the full (if unofficial) remaster treatment | Image credit: Rock Paper Shotgun

Yet there’s clearly a huge gap between having AI perform anti-aliasing, or touch up some brickwork, and going all-in on generative AI to recreate the behaviour of an entire human-ass being in real time. ACE has made progress since the iffy ramen shop visit, but still, I’m not convinced it’s ready to jump that chasm quite yet.

And even when it does, will enough players actually want to hear what AI voices have to say? For all the novelty of dynamic dialogue, the desire for emotive, moving, funny, scary, saddening, surprising stories isn’t going anywhere, and if Covert Protocol is any indication, the best ones will still be spun from human hands.